Generalizing Wave Gestures from Sparse Examples for Real-time Character Control

ACM Transactions on Graphics (TOG), 2015.

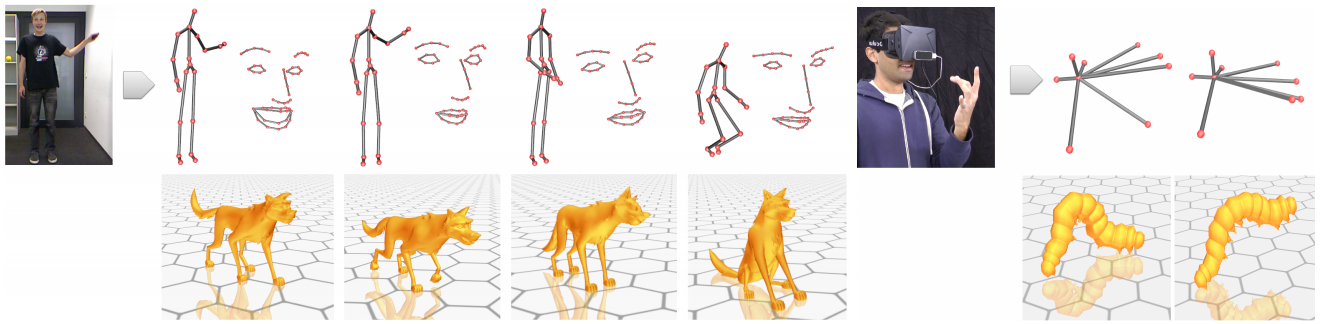

Motion-tracked real-time character control is important for games and VR, but current solutions are limited: retargeting is hard for nonhuman characters and binds locomotion to the sensing volume, while pose mappings are ambiguous and make dynamic motion control difficult. We robustly estimate wave properties (amplitude, frequency, and phase) for a set of interactively defined gestures by mapping user motions to a low-dimensional independent representation. The mapping separates simultaneous or intersecting gestures, and extrapolates gesture variations from single training examples. For animations such as locomotion, wave properties map naturally to stride length, step frequency, and progression, and allow smooth transitions from standing, to walking, to running. Interpolating out-of-phase locomotions is hard: for example, quadruped legs switch phase between walks and runs, so we introduce a new time-interpolation scheme to reduce artifacts. These improvements to real-time motion-tracked character control are important for common cyclic animations. We validate our approach in a user study, and demonstrate its versatility on part- and full-body motions across a variety of sensors.
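
To make the wave-property idea concrete, here is a minimal sketch of recovering amplitude, frequency, and phase from a single one-dimensional motion signal and mapping them to locomotion parameters. It is not the method described above, which relies on a learned low-dimensional independent representation; it substitutes a plain FFT peak estimate on a clean synthetic signal. The function names `estimate_wave` and `to_locomotion`, the `stride_scale` parameter, and the test signal are all assumptions made for illustration.

```python
import numpy as np


def estimate_wave(signal, sample_rate):
    """Estimate amplitude, frequency (Hz), and phase (rad) of the dominant
    sinusoid in a 1D signal, using the largest non-DC FFT bin.

    Assumption: the window holds a whole number of periods; otherwise
    spectral leakage biases all three estimates.
    """
    x = np.asarray(signal, dtype=float)
    x = x - x.mean()                      # remove the constant offset
    n = len(x)
    spectrum = np.fft.rfft(x)
    freqs = np.fft.rfftfreq(n, d=1.0 / sample_rate)
    k = int(np.argmax(np.abs(spectrum[1:]))) + 1   # skip the DC bin
    amplitude = 2.0 * np.abs(spectrum[k]) / n
    phase = float(np.angle(spectrum[k]))  # phase of A*cos(2*pi*f*t + phase)
    return float(amplitude), float(freqs[k]), phase


def to_locomotion(amplitude, frequency, phase, stride_scale=1.0):
    """Hypothetical mapping from wave properties to locomotion parameters:
    amplitude -> stride length, frequency -> step frequency,
    phase -> progression through the cycle in [0, 1)."""
    return {
        "stride_length": stride_scale * amplitude,
        "step_frequency": frequency,
        "progression": (phase / (2.0 * np.pi)) % 1.0,
    }


# Synthetic gesture: 0.5 * cos(2*pi*2*t + 0.3), sampled at 100 Hz for 2 s.
sample_rate = 100.0
t = np.arange(200) / sample_rate
gesture = 0.5 * np.cos(2.0 * np.pi * 2.0 * t + 0.3)

amp, freq, phase = estimate_wave(gesture, sample_rate)
params = to_locomotion(amp, freq, phase)
```

A batch FFT like this needs a full window of samples and a single dominant gesture, so it would not by itself separate simultaneous or intersecting gestures or respond with real-time latency; it only illustrates what the three wave properties are and how they could drive stride length, step frequency, and progression.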