Hierarchical Bayesian neural networks for personalized classification

Neural Information Processing Systems Workshop on Bayesian Deep Learning, 2016.

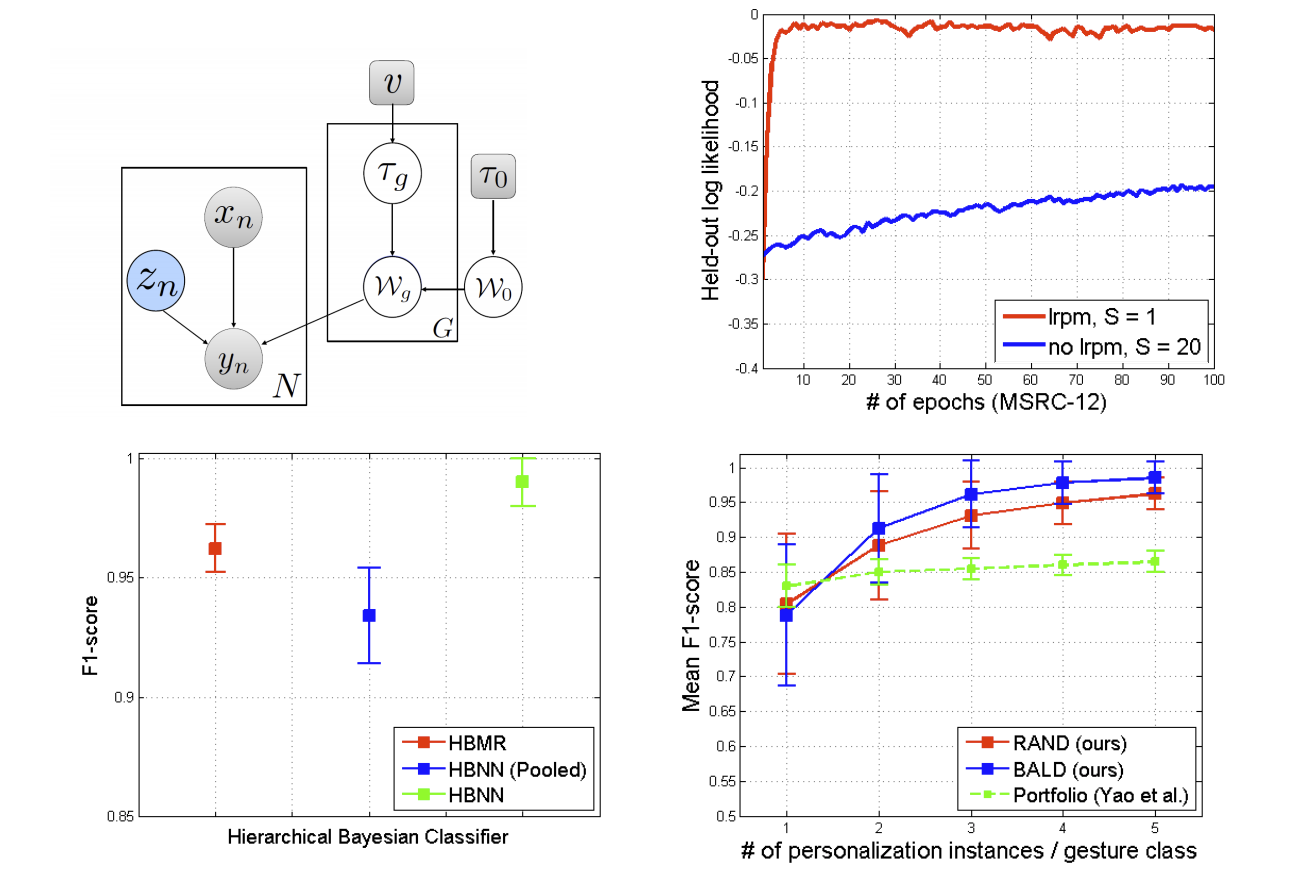

Building robust classifiers trained on data susceptible to group or subject-specific variations is a challenging yet common problem in pattern recognition. Hierarchical models allow sharing of statistical strength across groups while preserving group-specific idiosyncrasies, and are commonly used for modeling such grouped data [3]. We develop flexible hierarchical Bayesian models that parameterize group-specific conditional distributions $p(y_g \mid x_g, W_g)$ via multi-layered Bayesian neural networks. Sharing of statistical strength between groups allows us to learn large networks even when only a handful of labeled examples are available. We leverage recently proposed doubly stochastic variational Bayes algorithms to infer a full posterior distribution over the weights while scaling to large architectures. We find that the inferred posterior leads both to improved classification performance and to more effective active learning for iteratively labeling data. Finally, we demonstrate state-of-the-art performance on the MSRC-12 Kinect Gesture Dataset [2].
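
To make the structure described above concrete, the following is one common way such a hierarchical model and its variational objective could be written. It is an illustrative sketch under stated assumptions, not necessarily the specification used in this work: the Gaussian group-level prior, the shared hyperparameters $\mu$ and $\tau$ (treated here as point estimates), the network function $f$, the variational family $q_\phi$, and the indices $G$ and $N_g$ are all notation introduced for this example.

```latex
% Illustrative sketch only: the Gaussian prior and the symbols
% \mu, \tau, f, q_\phi, G, N_g are assumptions, not taken from the source.
\begin{align*}
W_g \mid \mu, \tau &\sim \mathcal{N}(\mu, \tau^{2} I), & g &= 1, \dots, G, \\
y_{g,n} \mid x_{g,n}, W_g &\sim \mathrm{Categorical}\big(\mathrm{softmax}(f(x_{g,n}; W_g))\big), & n &= 1, \dots, N_g, \\
\mathcal{L}(\phi) &= \sum_{g=1}^{G} \Big( \mathbb{E}_{q_\phi(W_g)}\Big[ \sum_{n=1}^{N_g} \log p(y_{g,n} \mid x_{g,n}, W_g) \Big]
  - \mathrm{KL}\big( q_\phi(W_g) \,\|\, p(W_g \mid \mu, \tau) \big) \Big).
\end{align*}
```

In this sketch, the shared prior mean $\mu$ is what lets groups with few labeled examples borrow strength from the others, while each $W_g$ remains free to deviate from it. A doubly stochastic estimator would approximate $\mathcal{L}(\phi)$ using both a minibatch of the $(x_{g,n}, y_{g,n})$ pairs and Monte Carlo samples of $W_g$ drawn from $q_\phi$.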