MoRA: LoRA Guided Multi-modal Disease Diagnosis with Missing Modality

International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI), 2024.

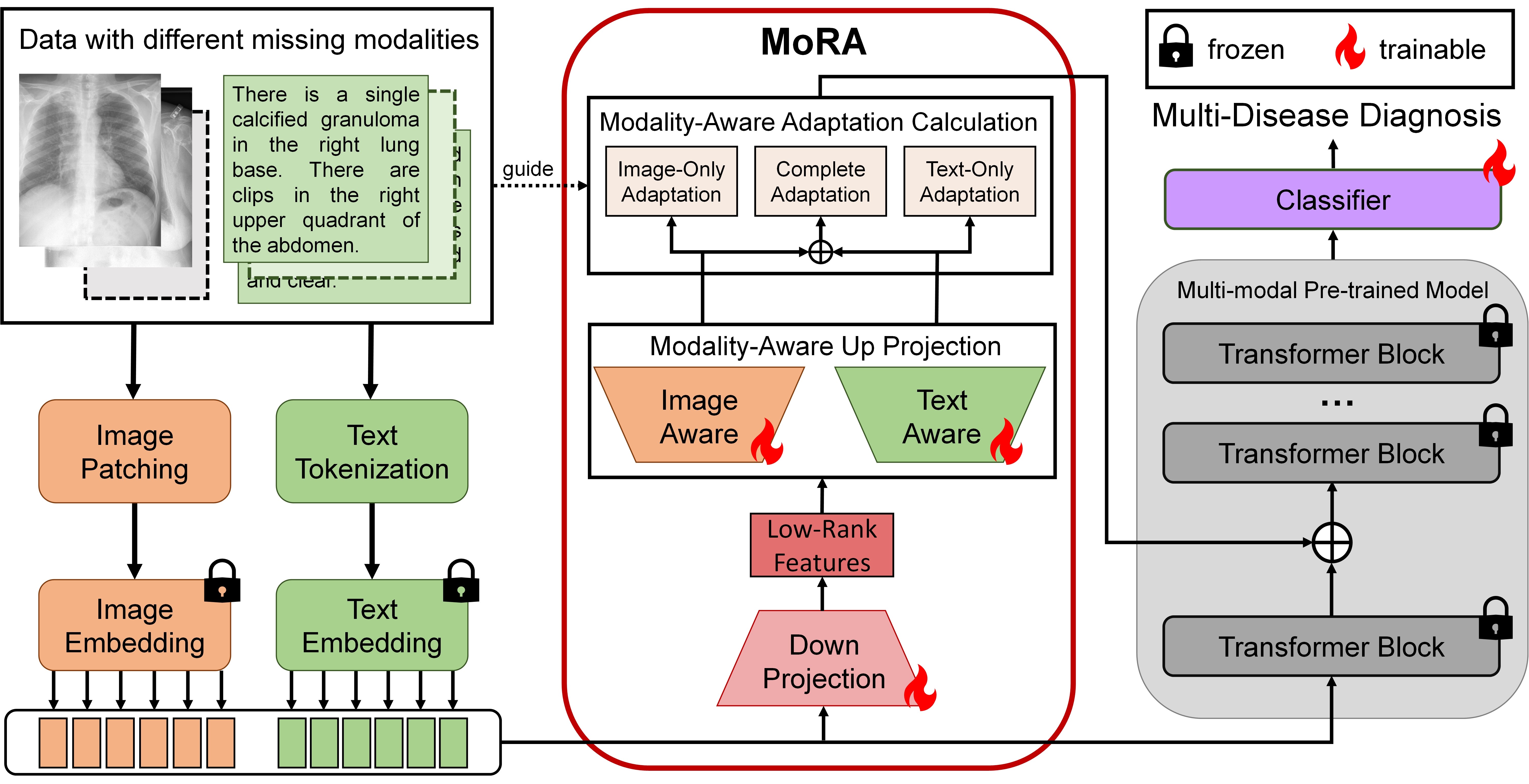

Multi-modal pre-trained models efficiently extract and fuse features from different modalities and can be fine-tuned with low memory requirements. Despite this efficiency, their application to disease diagnosis remains under-explored. A significant challenge is that modalities are frequently missing, which impairs performance. In addition, fine-tuning the entire pre-trained model demands substantial computational resources. To address these issues, we introduce Modality-aware Low-Rank Adaptation (MoRA), a computationally efficient method. MoRA projects each input to a low intrinsic dimension but uses different modality-aware up-projections, which provide modality-specific adaptation when modalities are missing. In practice, MoRA is integrated into the first block of the model and significantly improves performance when a modality is missing. It requires minimal computational resources, training fewer than 1.6% of the parameters that training the entire model would require. Experimental results show that MoRA outperforms existing techniques in disease diagnosis, with better robustness and training efficiency.
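The core idea in the abstract, a shared projection to a low intrinsic dimension followed by modality-aware up-projections, can be illustrated with a minimal NumPy sketch. This is not the authors' implementation. The class name `ModalityAwareLowRankAdapter`, the modality keys `"image"` and `"text"`, the layer width, the rank, and the zero initialization of the up-projections (borrowed from standard LoRA practice) are all assumptions made for illustration.

```python
import numpy as np


class ModalityAwareLowRankAdapter:
    """Toy low-rank adapter: one shared down-projection, one up-projection per modality.

    Hypothetical sketch only; names and shapes are illustrative assumptions.
    """

    def __init__(self, d_model, rank, modalities, seed=0):
        rng = np.random.default_rng(seed)
        # Shared down-projection: every input is mapped to the same low-rank space.
        self.down = rng.normal(0.0, 0.02, size=(d_model, rank))
        # Modality-aware up-projections, zero-initialized (a common LoRA convention)
        # so the adapter starts out as a no-op on top of the frozen model.
        self.up = {m: np.zeros((rank, d_model)) for m in modalities}

    def delta(self, x, modality):
        """Low-rank update for inputs of the given modality."""
        return (x @ self.down) @ self.up[modality]

    def num_trainable(self):
        """Number of adapter parameters (the only ones that would be trained)."""
        return self.down.size + sum(u.size for u in self.up.values())


# Illustrative sizes, not taken from the paper.
d_model, rank = 768, 4
adapter = ModalityAwareLowRankAdapter(d_model, rank, ["image", "text"])

# Stand-in for one frozen pre-trained weight matrix.
frozen_weight = np.random.default_rng(1).normal(size=(d_model, d_model))

# Five image tokens; the frozen output is corrected by the image-specific update.
x = np.random.default_rng(2).normal(size=(5, d_model))
out = x @ frozen_weight + adapter.delta(x, "image")
```

In this sketch only `down` and the entries of `up` would receive gradients, while `frozen_weight` stays fixed; the parameter count of this toy layer is illustrative and is not meant to reproduce the figure reported above.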

Acknowledgements

We thank all affiliates of the Harvard Visual Computing Group for their valuable feedback. This work was supported by NIH grant R01HD104969 and NIH grant 1U01NS132158.