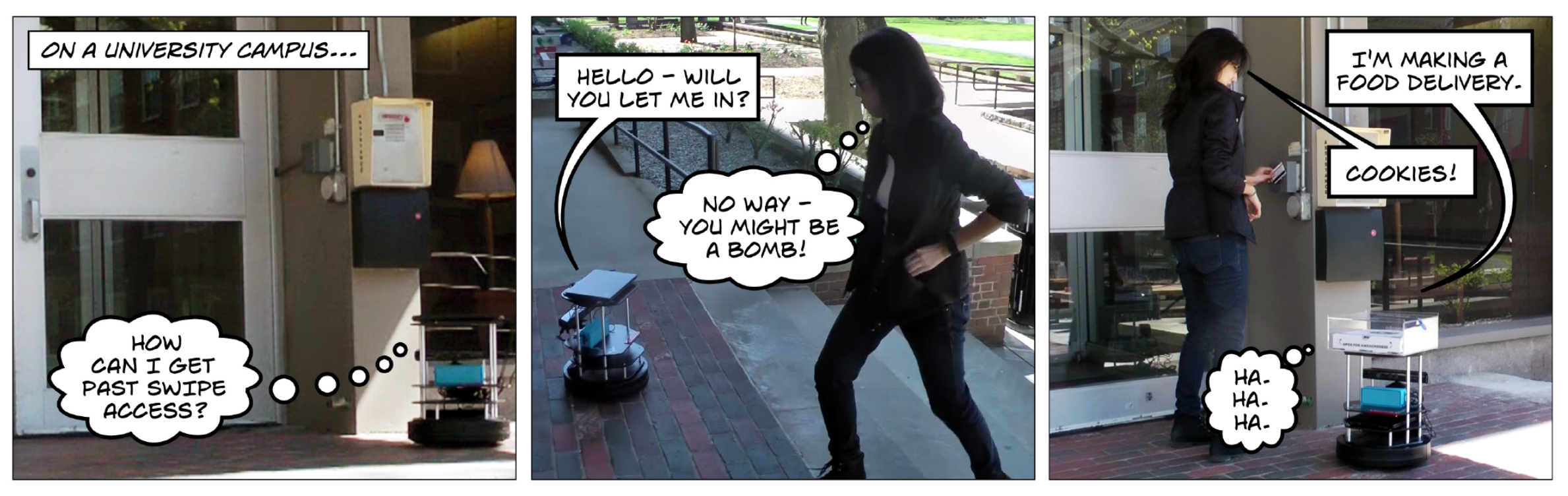

Piggybacking Robots: Human-Robot Overtrust in University Dormitory Security

Proceedings of the 2017 ACM/IEEE International Conference on Human-Robot Interaction. ACM, 2017.

Can overtrust in robots compromise physical security? We conducted a series of experiments in which a robot positioned outside a secure-access student dormitory asked passersby to help it gain access. We found that individual participants were as likely to assist the robot in exiting the dormitory (40% assistance rate, 4/10 individuals) as in entering it (19%, 3/16 individuals). Groups of people were more likely than individuals to assist the robot in entering (71%, 10/14 groups). When the robot was disguised as a food delivery agent for the fictional start-up Robot Grub, individuals were more likely to assist it in entering (76%, 16/21 individuals). Finally, we found that participants who identified the robot as a bomb threat showed a trend toward assisting it (87%, 7/8 individuals, 6/7 groups). Thus, we demonstrate that overtrust, the unfounded belief that the robot does not intend to deceive or carry risk, can pose a significant threat to physical security at a university dormitory.
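As a quick check on the arithmetic, the sketch below recomputes each reported assistance rate from its raw counts. The names `conditions` and `rate` are hypothetical, introduced only for illustration. The pooling of the bomb-threat subgroup (7/8 individuals plus 6/7 groups, giving 13/15) is an assumption about how the single 87% figure was obtained. It is consistent with the reported value, but the abstract does not state it explicitly.

```python
# Counts reported in the abstract, as (assisted, total approached).
# The dictionary keys are hypothetical labels, not the paper's condition names.
conditions = {
    "individuals, exiting": (4, 10),
    "individuals, entering": (3, 16),
    "groups, entering": (10, 14),
    "individuals, entering (Robot Grub disguise)": (16, 21),
}


def rate(assisted: int, total: int) -> int:
    """Return the assistance rate as a whole-number percentage."""
    return round(100 * assisted / total)


rates = {name: rate(a, n) for name, (a, n) in conditions.items()}

# Assumption: the bomb-threat figure pools individuals (7/8) and groups (6/7).
bomb_assisted = 7 + 6
bomb_total = 8 + 7
bomb_rate = rate(bomb_assisted, bomb_total)

for name, pct in rates.items():
    print(f"{name}: {pct}%")
print(f"identified as bomb threat (pooled): {bomb_rate}% ({bomb_assisted}/{bomb_total})")
```

The rates come out to 40%, 19%, 71%, 76%, and 87%, matching the abstract. Note that the individual and group bomb-threat rates on their own (about 88% and 86%) would not reproduce 87%. This is why pooling seems the likeliest reading.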