reVISit: Looking Under the Hood of Interactive Visualization Studies

Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, 2021.

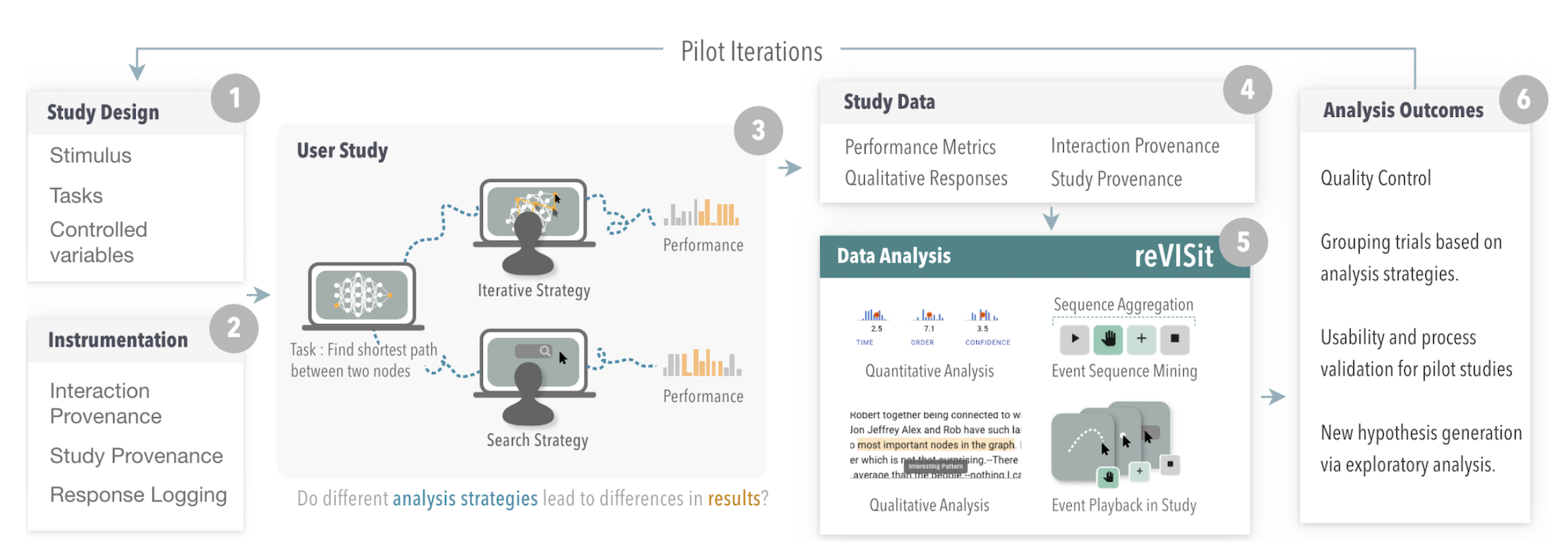

Quantifying user performance with metrics such as time and accuracy does not show the whole picture when researchers evaluate complex, interactive visualization tools. In such systems, performance is often influenced by differences in analysis strategy that statistical analysis methods cannot account for. To remedy this lack of nuance, we propose a novel analysis methodology for evaluating complex interactive visualizations at scale. We implement our analysis methods in reVISit, which enables analysts to explore participants’ interactions, performance metrics, and responses in the context of their analysis strategies. Replays of participant sessions can help identify usability problems during pilot studies and make individual analysis processes salient. To demonstrate the applicability of reVISit to visualization studies, we analyze participant data from two published crowdsourced studies. Our findings show that reVISit can be used to reveal and describe novel interaction patterns, to analyze performance differences between analysis strategies, and to validate or challenge design decisions.