The Quest for Omnioculars: Embedded Visualization for Augmenting Basketball Game Viewing Experiences

IEEE Transactions on Visualization and Computer Graphics (IEEE VIS), 2022.

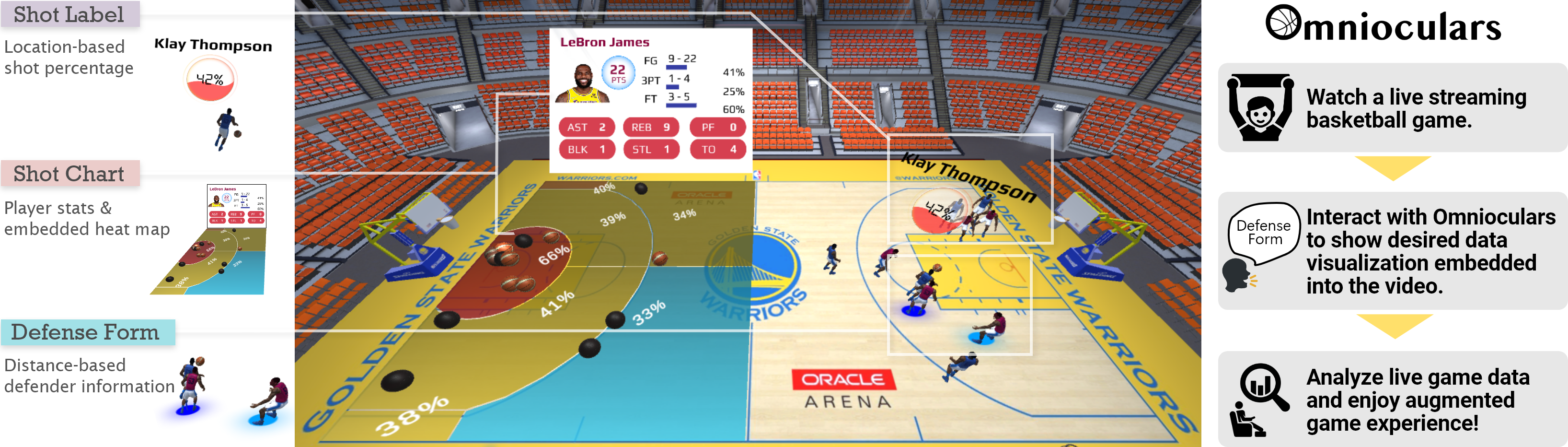

Sports game data is becoming increasingly complex, often consisting of multivariate data such as player performance stats, historical team records, and athletes’ positional tracking information. While numerous visual analytics systems have been developed to help sports analysts derive insights, few tools target fans to improve their understanding of, and engagement with, sports data during live games. By presenting extra data in the actual game views, embedded visualization has the potential to enhance fans’ game-viewing experience. However, little is known about how to design such visualizations for live games. In this work, we present a user-centered design study of interactive embedded visualizations that help basketball fans improve their live game-watching experience. We first conducted a formative study to characterize basketball fans’ in-game analysis behaviors and tasks. Based on our findings, we propose a design framework that informs the design of embedded visualizations according to specific data-seeking contexts. Following this framework, we present five novel embedded visualization designs targeting five representative contexts identified by the fans: shooting, offense, defense, player evaluation, and team comparison. We then developed Omnioculars, an interactive basketball game-viewing prototype that features the proposed embedded visualizations for fans’ in-game data analysis. We evaluated Omnioculars in a simulated basketball game with basketball fans. The study results suggest that our design supports personalized in-game data analysis and enhances game understanding and engagement.

Acknowledgements

This work is supported by NSF grants III-2107328 and IIS-1901030. We thank Hans H., Joan C., Harvey H., Ahmed S., Nhan H., and Max M. for their time.