What eye movement and memory experiments can tell us about the human perception of visualizations

Journal of Vision, 2017.

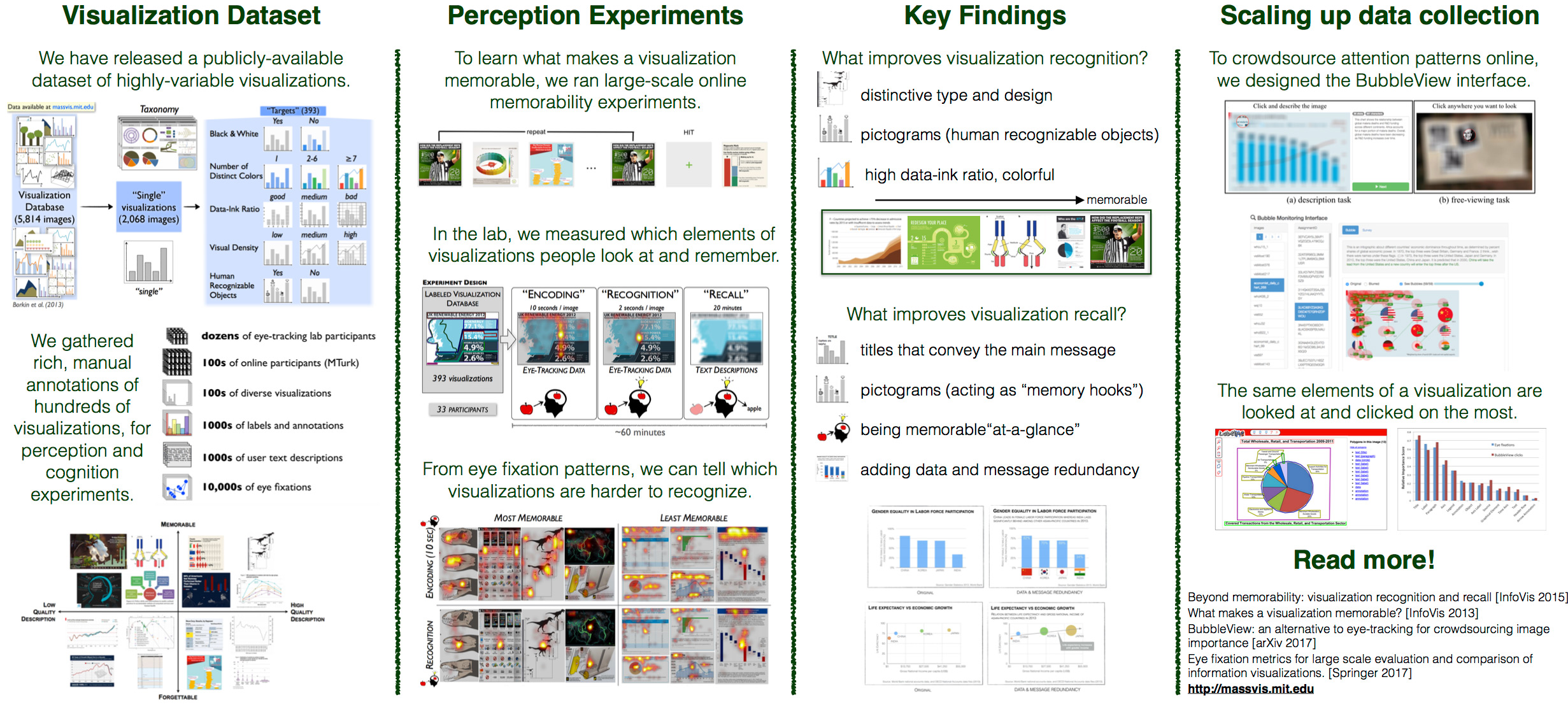

What makes a visualization (a graph, chart, or infographic) memorable? Where do people look on a visualization during encoding and retrieval? What aspects of a visualization can later be recalled from memory? Studying these questions in the context of visualizations extends our understanding of human perception beyond natural scenes to complex visual content. We ran a series of experiments in the lab and on the crowdsourcing platform Amazon's Mechanical Turk (MTurk). In the lab, we recorded people's eye movements as they viewed infographics for 10 seconds each, followed by a retrieval task after 20 minutes. In the final phase, participants described the visualizations from memory. On MTurk, we ran a series of memorability tests and, separately, a series of description tasks in which participants clicked on blurred visualizations to reveal small bubble-like regions at full resolution. We compared the consistency of human memory with the consistency of human attention on visualizations, in both lab and online settings. First, we find that people are consistent in where they attend and what they remember in visualizations. Second, we identify some qualities of visualizations that tend to make them more memorable. Importantly, we show that a visualization that is memorable at a glance can often also be recalled and described with higher fidelity. Third, we quantify which elements of visualizations people focus their attention on most. We find that text, especially titles, is particularly important, and that visual elements are not as distracting as initially hypothesized. Finally, we make our full dataset of thousands of visualizations, eye movements, memory scores, and text descriptions available to the community (massvis.mit.edu). This dataset offers an opportunity to explore a variety of old and new perception questions using a novel image type for such investigations.
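The abstract does not say how the consistency of memory is measured. One common approach in the memorability literature, assumed here rather than taken from this study, is split-half consistency. Participants are randomly divided into two groups, each image's hit rate is computed within each group, and the two lists of hit rates are rank-correlated. A high correlation means the two independent groups agree on which images are memorable. The sketch below is a minimal Python/NumPy version. The function names and the `hits` layout, in which every participant sees every image, are hypothetical simplifications.

```python
import numpy as np


def average_ranks(x: np.ndarray) -> np.ndarray:
    """Rank values 1..n, giving tied values the mean of their ranks."""
    order = np.argsort(x, kind="mergesort")
    ranks = np.empty(len(x), dtype=float)
    ranks[order] = np.arange(1, len(x) + 1)
    # Replace the ordinal ranks within each tie group with their average.
    _, inverse = np.unique(x, return_inverse=True)
    sums = np.bincount(inverse, weights=ranks)
    counts = np.bincount(inverse)
    return (sums / counts)[inverse]


def spearman(a: np.ndarray, b: np.ndarray) -> float:
    """Spearman rank correlation, computed as the Pearson correlation of ranks."""
    return float(np.corrcoef(average_ranks(a), average_ranks(b))[0, 1])


def split_half_consistency(hits: np.ndarray, n_splits: int = 25, seed: int = 0) -> float:
    """Mean split-half Spearman correlation of per-image hit rates.

    hits: (participants, images) array of 0/1 values, where 1 means the
    participant correctly recognized the image. This layout is a hypothetical
    simplification that assumes every participant saw every image.
    """
    rng = np.random.default_rng(seed)
    n_participants = hits.shape[0]
    scores = []
    for _ in range(n_splits):
        # Randomly split participants into two (near-)equal halves.
        perm = rng.permutation(n_participants)
        half_a = perm[: n_participants // 2]
        half_b = perm[n_participants // 2 :]
        # Per-image memorability estimate (hit rate) within each half.
        rate_a = hits[half_a].mean(axis=0)
        rate_b = hits[half_b].mean(axis=0)
        scores.append(spearman(rate_a, rate_b))
    return float(np.mean(scores))
```

For example, simulated responses in which each image has its own true recognition probability should produce a clearly positive score. Responses that are pure coin flips should produce a score near zero. Averaging over many random splits reduces the dependence of the estimate on any single partition of participants.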