To Which Out-Of-Distribution Object Orientations Are DNNs Capable of Generalizing?

arXiv preprint arXiv:2109.13445, 2021.

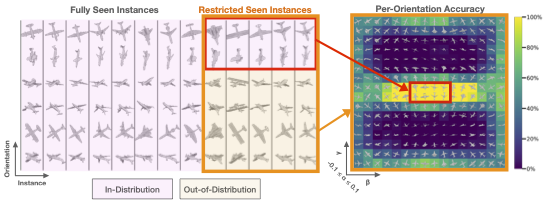

The capability of Deep Neural Networks (DNNs) to recognize objects in orientations outside the distribution of the training data, i.e., out-of-distribution (OOD) orientations, is not well understood. For humans, behavioral studies have shown that recognition accuracy varies across OOD orientations, with generalization much better for some orientations than for others. For DNNs, in contrast, it remains unknown how generalization abilities are distributed among OOD orientations. In this paper, we investigate the limitations of DNNs’ generalization capacities by systematically inspecting patterns of success and failure of DNNs across OOD orientations. We use an intuitive and controlled, yet challenging learning paradigm, in which some instances of an object category are seen at only a few geometrically restricted orientations, while other instances are seen at all orientations. We also investigate the effect of data diversity by increasing the number of instances seen at all orientations in the training set. We present a comprehensive analysis of DNNs’ generalization abilities and limitations for representative architectures (ResNet, Inception, DenseNet and CORnet). Our results reveal an intriguing pattern: DNNs are only capable of generalizing to instances of objects that appear like 2D, i.e., in-plane, rotations of in-distribution orientations.
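
To make the notion of an in-plane rotation concrete, the following is a minimal sketch of the purely geometric case, not the analysis used in the paper. It assumes each orientation is a 3x3 rotation matrix mapping object coordinates to camera coordinates, and that the camera's optical axis is the z-axis; the function names `is_in_plane_rotation_of` and `generalizable_by_in_plane_rule` are hypothetical. Under these assumptions, a test orientation is an exact in-plane rotation of a seen orientation when the relative rotation between them leaves the z-axis fixed. The sketch does not cover orientations that merely appear like in-plane rotations, for example because of object symmetry.

```python
import math


def rot_x(a):
    """Rotation by angle a (radians) about the x-axis (out of the image plane)."""
    c, s = math.cos(a), math.sin(a)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def rot_z(a):
    """Rotation by angle a (radians) about the z-axis (assumed optical axis)."""
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def matmul(A, B):
    """Product of two 3x3 matrices given as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def transpose(A):
    """Transpose of a 3x3 matrix (the inverse, for a rotation matrix)."""
    return [list(row) for row in zip(*A)]


def is_in_plane_rotation_of(R_test, R_seen, tol=1e-9):
    """True if R_test equals rot_z(theta) @ R_seen for some angle theta.

    The relative rotation D = R_test @ R_seen^T is a rotation about z
    exactly when D[2][2] == 1, since rows and columns of D have unit norm.
    """
    D = matmul(R_test, transpose(R_seen))
    return abs(D[2][2] - 1.0) < tol


def generalizable_by_in_plane_rule(R_test, seen_orientations, tol=1e-9):
    """Hypothetical rule: R_test is an in-plane rotation of some seen orientation."""
    return any(is_in_plane_rotation_of(R_test, R, tol) for R in seen_orientations)


# Illustrative values only: one seen (in-distribution) orientation.
seen = [rot_x(0.3)]
in_plane_view = matmul(rot_z(1.0), seen[0])      # image-plane rotation of a seen view
out_of_plane_view = matmul(rot_x(0.5), seen[0])  # reveals a different side of the object

print(generalizable_by_in_plane_rule(in_plane_view, seen))
print(generalizable_by_in_plane_rule(out_of_plane_view, seen))
```

In this sketch the first test view differs from the seen view only by a rotation in the image plane, while the second tilts the object out of the plane, so only the first satisfies the rule.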