When and how convolutional neural networks generalize to out-of-distribution category–viewpoint combinations

Nature Machine Intelligence, 2022.

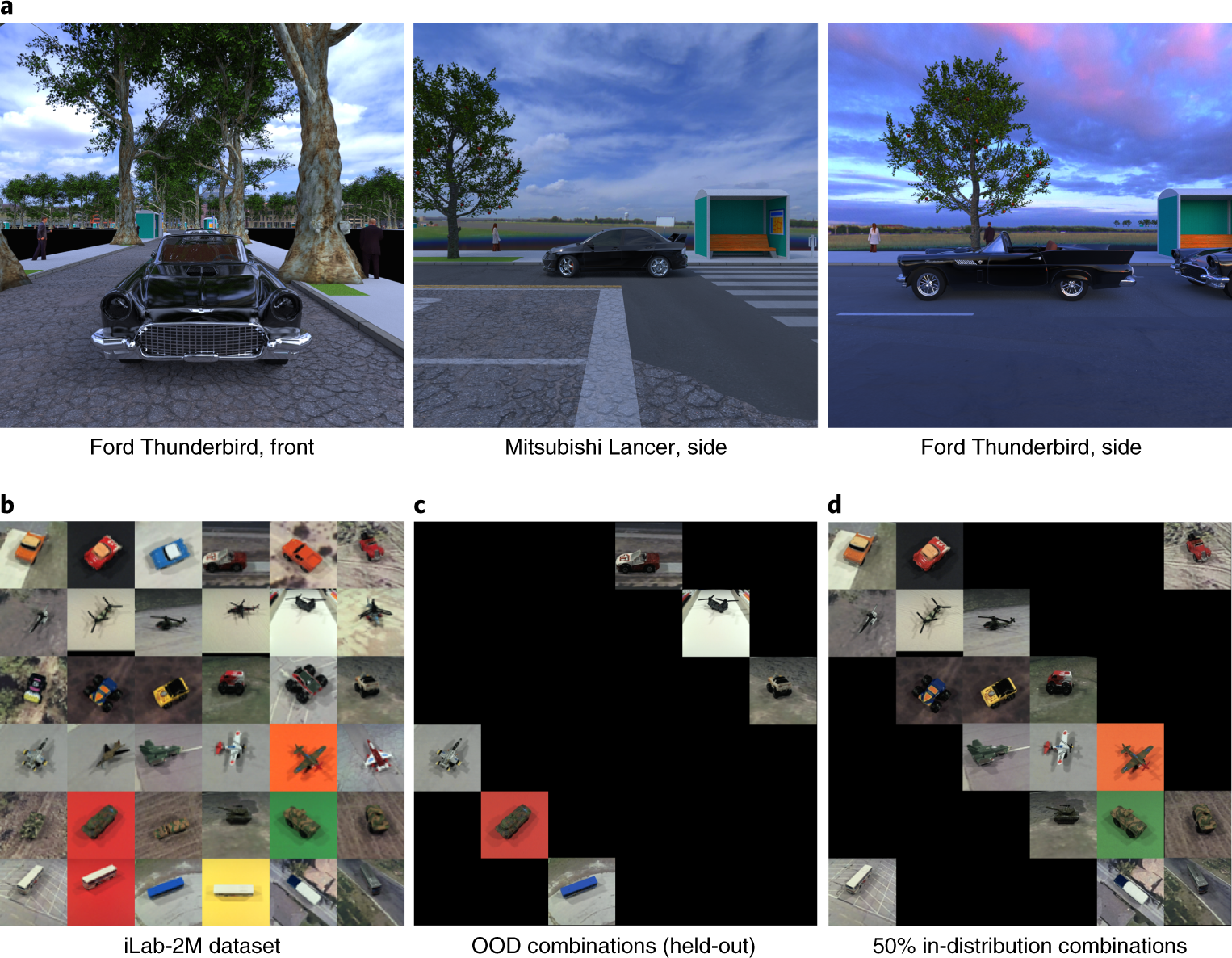

Object recognition and viewpoint estimation lie at the heart of visual understanding. Recent studies have suggested that convolutional neural networks (CNNs) fail to generalize to out-of-distribution (OOD) category–viewpoint combinations, that is, combinations not seen during training. Here we investigate when and how such OOD generalization may be possible by evaluating CNNs trained to classify both object category and three-dimensional viewpoint on OOD combinations, and identifying the neural mechanisms that facilitate such OOD generalization. We show that increasing the number of in-distribution combinations (data diversity) substantially improves generalization to OOD combinations, even with the same amount of training data. We compare learning category and viewpoint in separate and shared network architectures, and observe starkly different trends on in-distribution and OOD combinations: shared networks are helpful in distribution, whereas separate networks significantly outperform shared ones on OOD combinations. Finally, we demonstrate that such OOD generalization is facilitated by the neural mechanism of specialization, that is, the emergence of two types of neuron: neurons selective to category and invariant to viewpoint, and neurons selective to viewpoint and invariant to category.
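
To make the notions of in-distribution and OOD combinations and of data diversity concrete, the following is a minimal sketch of how a grid of category–viewpoint combinations might be partitioned. It is an illustration under stated assumptions, not the procedure used in the paper: the function names `split_combinations` and `images_per_combination`, the rotating selection rule, and the grid and budget sizes are all hypothetical.

```python
import itertools


def split_combinations(num_categories, num_viewpoints, seen_per_category):
    """Partition all (category, viewpoint) pairs into seen and OOD sets.

    Hypothetical rule (an assumption, not taken from the paper): category c
    is seen at `seen_per_category` consecutive viewpoints starting at index
    c, wrapping around, so each category appears in training and, when there
    are at least as many categories as viewpoints, so does each viewpoint.
    """
    all_pairs = set(itertools.product(range(num_categories), range(num_viewpoints)))
    seen = set()
    for c in range(num_categories):
        for k in range(seen_per_category):
            seen.add((c, (c + k) % num_viewpoints))
    return seen, all_pairs - seen


def images_per_combination(total_images, in_distribution):
    """Images available per seen combination under a fixed total budget."""
    return total_images // len(in_distribution)


# Same training budget, two levels of data diversity (illustrative sizes).
low_seen, low_ood = split_combinations(5, 5, seen_per_category=1)
high_seen, high_ood = split_combinations(5, 5, seen_per_category=3)
low_per_combo = images_per_combination(3000, low_seen)
high_per_combo = images_per_combination(3000, high_seen)
```

In this sketch, raising `seen_per_category` increases the number of in-distribution combinations while the total image budget stays fixed, so each seen combination receives fewer images. This mirrors the comparison described above, in which diversity is varied with the same amount of training data.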

Acknowledgements

We are grateful to Tomaso Poggio and Pawan Sinha for their insightful advice and warm encouragement. This work has been partially supported by NSF grant IIS-1901030, a Google Faculty Research Award, the Toyota Research Institute, the Center for Brains, Minds and Machines (funded by NSF STC award CCF-1231216), Fujitsu Laboratories Ltd. (Contract No. 40008819) and the MIT-Sensetime Alliance on Artificial Intelligence. We also thank Kumaraditya Gupta for help with the figures, and Prafull Sharma for insightful discussions.